With distributed computing gaining popularity, terms like cloud computing and edge computing are becoming increasingly common. These aren’t just buzzwords meant to spark interest in a trend; they are established technologies driving innovation across industries.

Cloud computing and edge computing are critical components of modern IT systems. But what exactly do these technologies entail? And how do they stack up against each other? Let’s find out.

An Introduction to Cloud Computing

We have all used Dropbox or OneDrive to back up our important files and data. The data is said to be stored on the “Cloud,” but what does that mean?

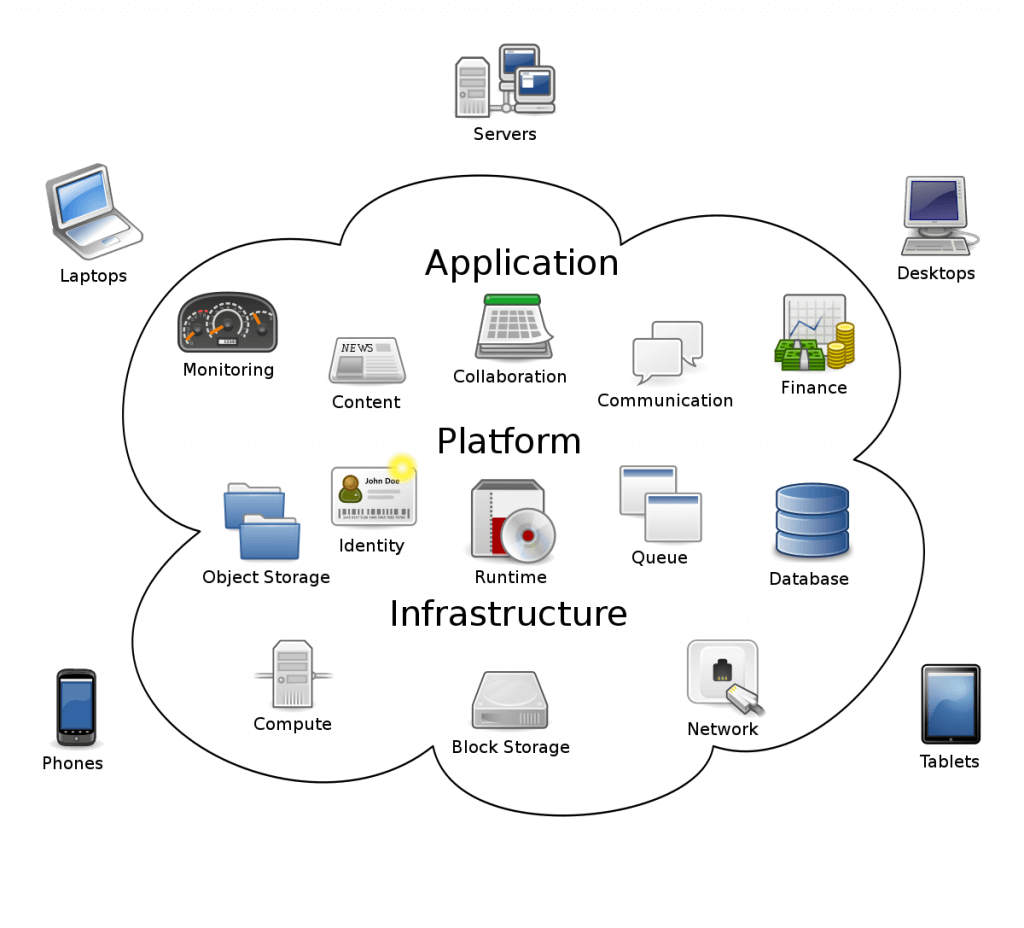

The Cloud, simply put, is a collection of computing resources accessible over the internet. The idea is that you can use industrial-scale hardware located anywhere in the world cheaply and securely.

Traditionally, companies were forced to set up and maintain large servers for their in-house computing needs. This incurred high costs and left little room for flexibility. Moving an application to the cloud allows a company to abstract away the hardware backend, requesting as many resources as needed.

It has become routine for websites and other applications to be served entirely from the cloud, greatly simplifying the technology stack. Services like Amazon Web Services (AWS) and Microsoft Azure are frontrunners in this space, powering all sorts of applications for companies worldwide.

Pros

- Scalable: Cloud services can be ramped up as and when required, providing flexibility to applications without hard investments.

- Cheap: It is more cost-effective for a service provider to run large centralized server farms than for each firm to set up its own computers. This allows cloud services to be made available at a much lower cost than traditional setups.

- Simple: Setting up and managing an in-house database and API backend is no easy undertaking. It is easier to abstract the hardware away and request computing resources as required.

Cons

- Network Dependent: The main issue with cloud services is complete network dependence. Cloud services are not a solution for remote areas with poor network connectivity.

- Slow: Depending on the distance to the cloud servers and the state of the network, a round trip can take anywhere from tens to hundreds of milliseconds, and longer on congested or unreliable links. That delay is too much in applications requiring instant decisions (such as controlling industrial equipment).

- Bandwidth Intensive: As the cloud servers are responsible for computation and storage, a lot of data needs to be transmitted. Bandwidth costs add up quickly in scenarios that generate vast amounts of data (AI, video recording, etc.).

Edge Computing Explained

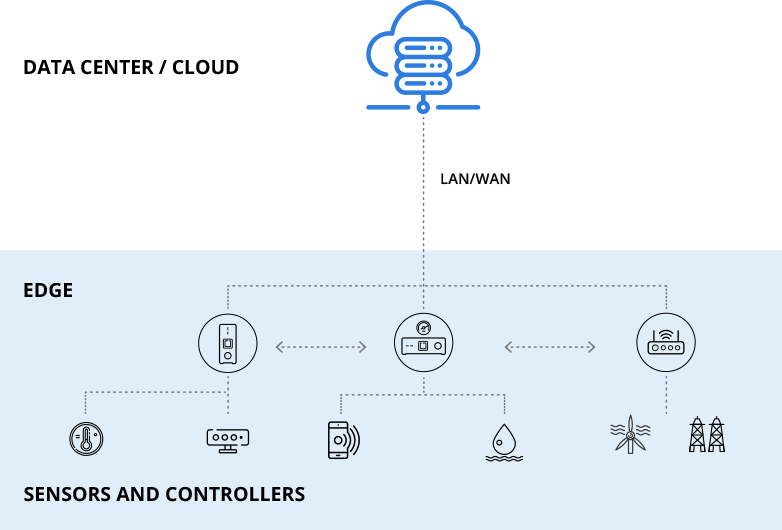

A problem with cloud computing is its dependence on the network. This is not a problem for most tasks, but some applications are extremely time-sensitive. The delay in transmitting data, performing the processing on the cloud, and receiving the results is slight but perceptible.

Then there is the issue of bandwidth. Applications involving video processing or AI algorithms work with large amounts of data, which can be expensive to transmit to the cloud. This is even more of a concern when the data is collected in a remote location where network connectivity is limited.

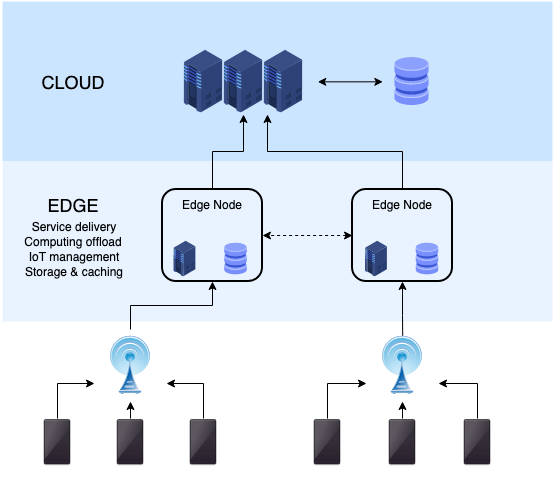

Edge computing delivers an answer to these problems. Instead of sending the data to a server halfway across the world, it’s stored and processed on-site, or at least at a nearby location.

This has the advantage of saving data transmission costs and largely taking network latency out of the equation. The computation can take place immediately, giving results in real time, which is vital for many applications.

Pros

- Low Latency: As the edge computer is located at or near the source of data, there is little network latency to contend with. This gives near-immediate results, which is important for real-time processes.

- Reduced Data Transmission: The edge computer can process the bulk of the data at the site, transmitting only the results to the cloud. This helps reduce the volume of data transfer required.
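To make the data-reduction point concrete, here is a minimal Python sketch. The sensor batch, the `summarize` helper, and the choice of summary fields are all assumptions made for illustration; a real edge node would pick whatever summary its application needs.

```python
import json
import statistics


def summarize(readings):
    """Reduce a batch of raw sensor readings to a few summary statistics."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(statistics.fmean(readings), 2),
    }


# Hypothetical batch: 1,000 temperature samples collected on-site.
raw_readings = [20.0 + (i % 10) * 0.1 for i in range(1000)]

# Compare what would travel over the network in each case.
raw_payload = json.dumps(raw_readings).encode("utf-8")
summary_payload = json.dumps(summarize(raw_readings)).encode("utf-8")

print(f"raw batch: {len(raw_payload)} bytes")
print(f"summary:   {len(summary_payload)} bytes")
```

The summary stays roughly the same size no matter how many samples go into it, which is why processing at the edge can cut transfer volume so sharply.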

Cons

- More Expensive than Cloud: Unlike cloud computing, edge computing requires a dedicated system at each edge node. Depending on the number of such nodes in an organization, the costs can be much higher than cloud services.

- Complex Setup: With cloud computing, all you need to do is request resources and build the application frontend. The nitty-gritty of the hardware carrying out those instructions is left to the cloud service provider. In edge computing, however, you need to build the backend yourself, taking into account the application’s needs. As a result, it is a much more involved process.

Cloud Computing Vs. Edge Computing: Which One Is Better?

The first thing that you must understand is that cloud computing and edge computing are not competing technologies. They are not different solutions to the same problem but separate approaches altogether, solving different problems.

Cloud computing is best for scalable applications that need to be ramped up or wound down according to demand. Web servers, for example, can request extra resources during periods of high server load, ensuring seamless service without incurring any permanent hardware costs.
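The idea of ramping up and winding down can be sketched as a simple proportional rule: size the fleet so that each server sits near a target load. The function below is a hypothetical illustration, not any provider's API, and the parameter names and limits are assumptions.

```python
import math


def desired_instances(current, load_percent, target_percent,
                      min_instances=1, max_instances=20):
    """Return how many instances keep average load near target_percent.

    current        -- instances running now
    load_percent   -- average load per instance right now (e.g. CPU %)
    target_percent -- load per instance we would like to hold
    """
    if current < 1 or target_percent <= 0:
        raise ValueError("current must be >= 1 and target_percent must be > 0")
    # Total work is current * load; divide it across instances at the target load.
    wanted = math.ceil(current * load_percent / target_percent)
    # Clamp so a spike or a lull cannot scale the fleet without bound.
    return max(min_instances, min(max_instances, wanted))


print(desired_instances(5, 85, 60))  # busy period: scale out
print(desired_instances(4, 15, 60))  # quiet period: scale in
```

Managed cloud platforms typically offer autoscaling features that apply rules of this kind automatically, so the application only pays for extra servers while the load is high.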

Edge computing, on the other hand, is suitable for real-time applications that generate a lot of data. The Internet of Things (IoT), for example, deals with smart devices connected to a local network. These devices lack powerful processors and must rely on an edge computer for their computational needs. Doing the same thing with the cloud would be too slow, and often unfeasible owing to the large amounts of data involved.

In short, both cloud and edge computing have their use cases, and the choice between them depends on the application in question.

The Hybrid Approach

As we said earlier, cloud computing and edge computing are not competitors, but solutions to different problems. That raises the question: can they be used together?

The answer is yes. Many applications take a hybrid approach, integrating both technologies for ultimate efficiency. For example, industrial automation machinery is usually connected to an on-site embedded computer.

This edge computer is responsible for operating the device and performing complex calculations without delay. At the same time, it transmits a limited amount of data to the cloud, which runs the software that manages the overall operation.

In this way, the application makes full use of the strengths of both approaches, relying on edge computing for real-time computation while using cloud computing for everything else.
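A rough Python sketch of this split is shown below. Everything in it is hypothetical: the `EdgeController` class, the temperature limit, the batch size, and the `uploads` list, which stands in for a real cloud client.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeController:
    """Hypothetical on-site controller: decides locally, reports summaries upstream."""
    temp_limit_c: float = 80.0
    batch_size: int = 5
    _buffer: list = field(default_factory=list)
    uploads: list = field(default_factory=list)  # stand-in for a cloud client

    def handle_reading(self, temp_c: float) -> str:
        # Real-time path: decide immediately, with no network round trip.
        action = "shutdown" if temp_c > self.temp_limit_c else "run"

        # Reporting path: buffer readings and upload only a compact summary.
        self._buffer.append(temp_c)
        if len(self._buffer) >= self.batch_size:
            self._send_to_cloud({
                "samples": len(self._buffer),
                "max_temp_c": max(self._buffer),
                "over_limit": sum(t > self.temp_limit_c for t in self._buffer),
            })
            self._buffer.clear()
        return action

    def _send_to_cloud(self, summary: dict) -> None:
        # Placeholder: a real system would call a cloud SDK or HTTPS endpoint here.
        self.uploads.append(summary)


controller = EdgeController()
actions = [controller.handle_reading(t) for t in [70.0, 72.5, 81.0, 79.0, 75.0, 71.0]]
print(actions)
print(controller.uploads)
```

The safety decision never waits on the network, while the cloud still receives enough information to monitor the machine over time.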

Which Is the Best Distributed Computing Technology?

Edge computing is not an upgraded version of cloud computing. It is a different approach toward distributed computing that comes in handy for time-sensitive and data-intensive applications.

However, cloud computing is still the most flexible and cost-efficient approach for most other applications. By offloading storage and processing to a dedicated server, companies can focus on their operations without worrying about backend implementation.

Both are essential tools in the repertoire of a savvy IT professional, and most cutting-edge facilities, whether IoT or otherwise, leverage a combination of the two technologies to get the best results.