If you have spent any time at all debating between monitors, then you’ve encountered the terms “G-Sync” and “FreeSync.” Unless you are a gamer looking for better performance, the terms likely don’t mean much to you.

But knowing what the terms mean, and how adaptive sync technology works, will help you make the best decision about what monitor to use.

What is Adaptive Sync?

FreeSync and G-Sync are both forms of adaptive sync. If you have ever played a game and experienced screen tearing, juddering, or other graphical errors, you know how disruptive the experience can be.

These errors often happen because the frame rate delivered by a computer's graphics processing unit (GPU) and the refresh rate of the monitor do not line up. Adaptive sync is the process by which the refresh rate of the monitor is matched to the frame rate of the GPU.
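The matching idea can be sketched in a few lines of Python. This is only an illustration, not how any driver or monitor firmware is written: the function name and the 48 to 144 Hz range are assumptions chosen for the example, and real displays handle frame rates below their supported range in more sophisticated ways than the simple clamp shown here.

```python
def adaptive_refresh_hz(gpu_fps: float, min_hz: float = 48.0, max_hz: float = 144.0) -> float:
    """Return the refresh rate a (hypothetical) adaptive sync display would run at.

    Inside the supported range the display simply follows the GPU.
    Outside it, this sketch clamps to the nearest supported rate.
    """
    if gpu_fps <= 0:
        raise ValueError("gpu_fps must be positive")
    return max(min_hz, min(gpu_fps, max_hz))


if __name__ == "__main__":
    for fps in (30, 90, 200):
        print(f"GPU at {fps} fps -> display refreshes at {adaptive_refresh_hz(fps):.0f} Hz")
```

With these assumed limits, a GPU producing 90 frames per second gets a 90 Hz display, while 200 frames per second is capped at the panel's 144 Hz maximum.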

Monitor Refresh Rates

The majority of display problems occur because of a disparity between the frame rate of the GPU and the refresh rate of the monitor. Most modern monitors refresh around 60 times per second, or 60 Hz. However, there are also 75 Hz, 120 Hz, 144 Hz, and even 240 Hz monitors. These devices can deliver better performance if you have a graphics card that can deliver higher frame rates.

Screen tearing and other graphical issues occur when the refresh rate of the monitor and the frame rate produced by the GPU do not match up. You can spot a screen tear as in the above screenshot when the top half of the screen image is out of sync with the lower half.

Think of it like this: older games are usually not graphically intensive, so adaptive sync isn’t needed to match the frame rate of the GPU with the refresh rate of the monitor.

On the other hand, more modern titles can strain even high-end GPUs. Microsoft Flight Simulator is a good example; even a high-end gaming computer might have a hard time producing more than 30 to 45 frames per second.

When the frame rate of a game does not line up with the refresh rate of a monitor, whether it runs higher or lower, the screen can tear and stutter because the display and the GPU are out of step.
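To see why a mismatch produces tearing, here is a rough Python simulation of a fixed-refresh monitor with no synchronization at all. It is a simplified model, not a measurement: it assumes the GPU delivers frames at perfectly even intervals, that each new frame replaces the old one instantly, and that a tear appears whenever a new frame arrives partway through a refresh. The function name is made up for this example.

```python
from fractions import Fraction


def count_torn_refreshes(gpu_fps: int, refresh_hz: int, seconds: int = 1) -> int:
    """Count refresh cycles in which a new frame arrives mid-refresh.

    Exact fractions are used so that frames landing precisely on a
    refresh boundary are not miscounted because of rounding.
    """
    torn_cycles = set()
    for frame in range(1, gpu_fps * seconds + 1):
        finished_at = Fraction(frame, gpu_fps)   # time the frame is ready, in seconds
        position = finished_at * refresh_hz      # same moment, measured in refresh cycles
        if position.denominator != 1:            # not on a boundary, so mid-refresh
            torn_cycles.add(position.numerator // position.denominator)
    return len(torn_cycles)


if __name__ == "__main__":
    print(count_torn_refreshes(60, 60))    # 0: frames and refreshes line up
    print(count_torn_refreshes(120, 60))   # 60: every refresh is interrupted
    print(count_torn_refreshes(45, 60))    # 30: half of the refreshes are interrupted
```

In this model a 60 Hz monitor tears both when the GPU runs faster (120 frames per second) and when it runs slower (45 frames per second). Adaptive sync avoids the problem by starting each refresh only when a frame is ready.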

FreeSync Vs. G-Sync

Both FreeSync and G-Sync are designed to smooth out the display of an image on a monitor, but they approach this through different methods. The two technologies also differ on a hardware level. The primary difference between them is this: FreeSync is an AMD technology, while G-Sync is an NVIDIA technology.

What is FreeSync?

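FreeSync is AMD's adaptive sync technology and is built for AMD graphics cards, so NVIDIA users cannot use FreeSync itself, although many FreeSync monitors also work with NVIDIA cards through the G-Sync Compatible mode described below. It also isn’t available on every monitor. Only displays that support VESA Adaptive-Sync can utilize FreeSync. Compatible monitors rely on their own internal boards, rather than an added proprietary chip, to handle the variable refresh timing and correctly display the image. FreeSync works over both HDMI and DisplayPort.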

The FreeSync brand isn’t just haphazardly applied to compatible monitors, however. The displays are put through a strict series of tests and must meet certain benchmarks in order to qualify for the FreeSync branding.

In terms of cost, FreeSync-compatible monitors tend to be more affordable than comparable G-Sync monitors. This is primarily because FreeSync uses the open, royalty-free Adaptive-Sync standard created by VESA.

What is G-Sync?

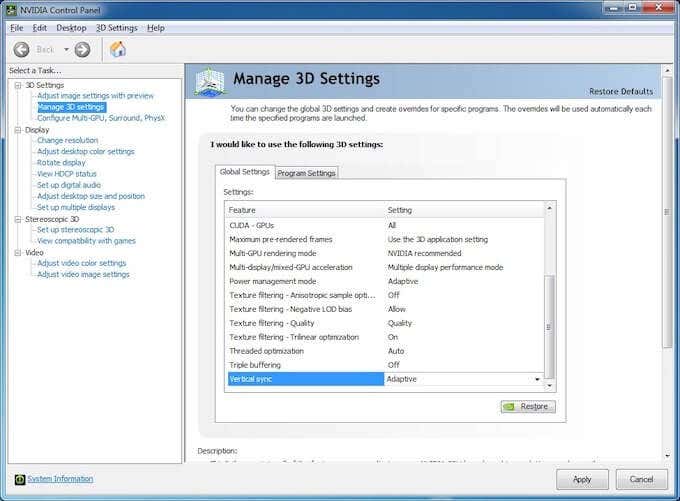

G-Sync is NVIDIA’s adaptive sync alternative to FreeSync. While FreeSync uses an open standard as its base, G-Sync relies on a proprietary chip inside the monitor to handle the variable refresh processing. This results in G-Sync monitors carrying a slightly higher price tag. Because of the higher price, there is a general sense that G-Sync is the superior technology, but this is not quite true.

There are three primary types of G-Sync technology: G-Sync, G-Sync Ultimate, and G-Sync Compatible. G-Sync is the standard option, while G-Sync Compatible is a more budget-friendly option for monitors that lack the NVIDIA chip and rely on Adaptive-Sync instead. G-Sync Ultimate is the most expensive choice, as the monitor has to meet exceedingly strict standards to qualify.

FreeSync Vs. G-Sync: Which One is Better?

Choosing between FreeSync and G-Sync is more complicated than it may appear, but there is one aspect that makes it a simple choice. If you have a machine with an AMD graphics card and you have no plans to swap it out, FreeSync is your only option.

On the other hand, if you have an NVIDIA graphics card, G-Sync is your pick. If you are building a machine from the ground up, more variables come into play.

At lower resolutions, the performance difference between the two technologies is difficult to notice. At 1080p and 60 Hz, any difference you can see is often small and not worth the extra cost. If you plan to push for higher performance but want to save money where you can, FreeSync is friendlier on the wallet.

If you are aiming for the absolute highest performance, especially with 4K and HDR, then choose G-Sync. While FreeSync is perfectly adequate and performs quite well in most situations, G-Sync is superior at higher performance levels.

The G-Sync Ultimate level outperforms FreeSync at every turn, and NVIDIA is the current market leader with regard to GPUs. If graphics make or break your experience, stick with G-Sync.

Patrick is an Atlanta-based technology writer with a background in programming and smart home technology. When he isn’t writing, nose to the grindstone, he can be found keeping up with the latest developments in the tech world and upping his coffee game.